The Energy Crisis Hidden in Plain Sight: Understanding AI’s Massive Power Demands

AI’s consumption of energy and other resources is rapidly becoming one of the most pressing infrastructure challenges of our time. Here’s what you need to know:

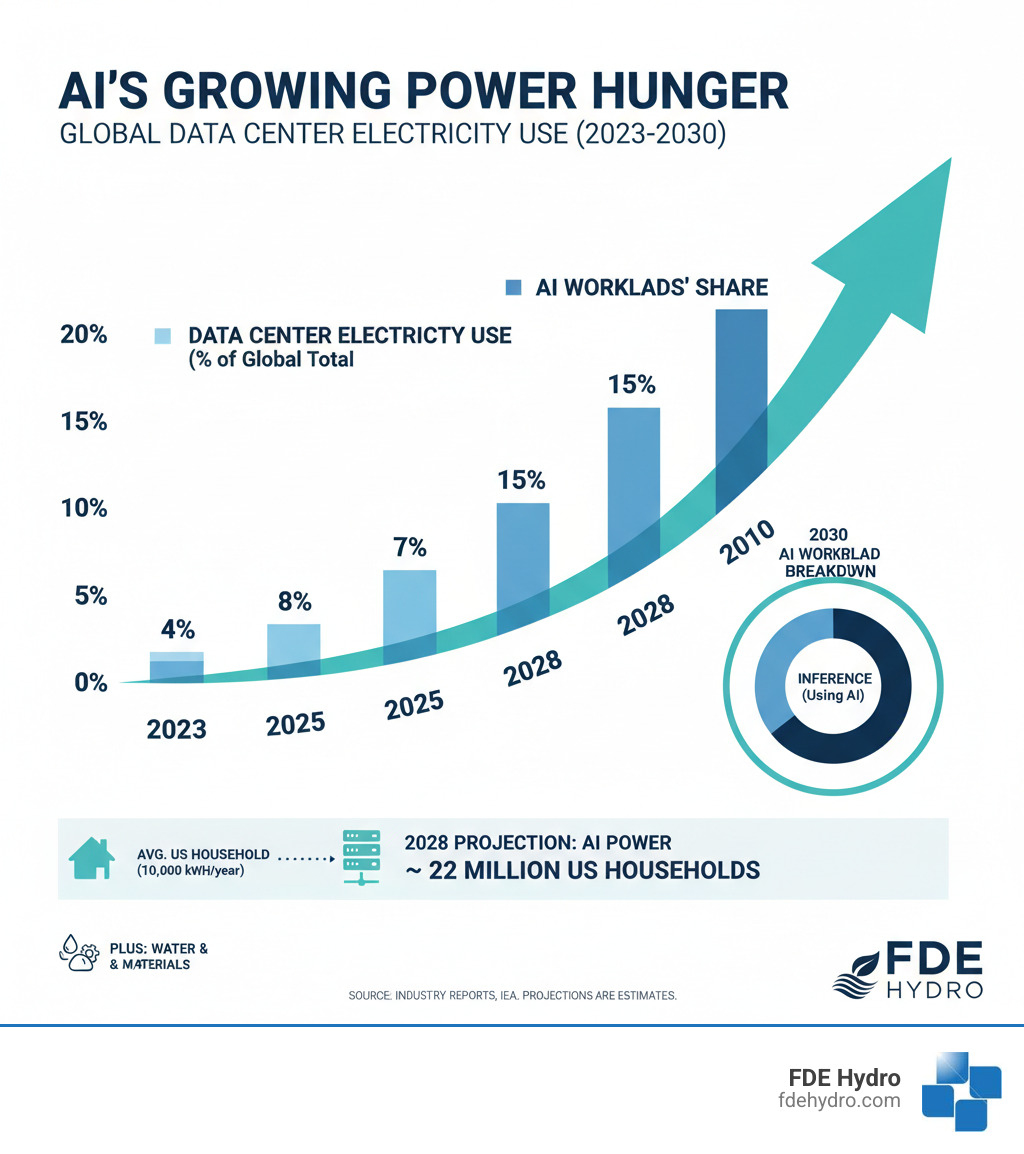

- Current Scale: Data centers consumed 4.4% of U.S. electricity in 2023, with AI workloads driving the majority of growth

- Projected Growth: By 2028, AI could consume as much electricity annually as 22% of all U.S. households

- Hidden Costs: Beyond electricity, AI infrastructure consumes millions of gallons of water and requires 800 kg of raw materials per 2 kg of computing hardware

- Transparency Gap: Most technology companies treat energy usage as a trade secret, making accurate measurement nearly impossible

- Global Impact: By 2030-2035, data centers could account for 20% of global electricity use

Two months after ChatGPT’s release in November 2022, it had reached 100 million active users. Suddenly, everyone was racing to deploy more generative AI. But behind every chatbot conversation, image generation, and AI-powered recommendation sits a massive, power-hungry infrastructure that most people never see.

The numbers are staggering. A single query through an AI chatbot consumes 10 times the electricity of a standard web search. Training large language models can consume 50 gigawatt-hours of energy—enough to power a major city for three days. And this is just the beginning.

The AI revolution isn’t just a software story. It’s fundamentally an energy infrastructure challenge. As AI becomes embedded in everything from smartphones to industrial operations, the question isn’t whether we can build the models—it’s whether we can power them sustainably.

This matters because the decisions we make today about AI energy resource planning will shape decades of infrastructure investment, electricity costs, and environmental impact. For those managing large-scale infrastructure projects, understanding this energy demand is critical to planning future capacity and ensuring reliable power delivery.

I’m Bill French Sr., Founder and CEO of FDE Hydro, where we’ve spent years developing modular hydropower solutions that deliver reliable, clean baseload power—exactly the kind of stable AI energy resource that data centers and AI infrastructure desperately need. After five decades building critical infrastructure and participating in the Department of Energy’s strategic planning for next-generation hydropower, I’ve seen how energy challenges can make or break technological progress.


From Chatbots to Power Grids: Quantifying AI’s Voracious Energy Demand

Every time you ask an AI chatbot a question, you’re tapping into an energy infrastructure that rivals small cities. The numbers behind this technological revolution tell a story that most people never see—and it’s happening faster than anyone expected.

The International Energy Agency has been tracking this surge, and its projections are eye-opening. According to the IEA, data center electricity use could double by 2026, reaching about 1,000 terawatt-hours. To put that in perspective, that’s roughly equivalent to Japan’s entire electricity consumption today.

This isn’t just about a few server rooms humming away in the background. We’re talking about a fundamental shift in how our electrical grids need to operate. The AI energy resource challenge is reshaping infrastructure planning from the ground up.

The Drivers of Consumption: Training vs. Inference

Here’s something that surprises most people: there are actually two very different ways AI consumes energy, and understanding the difference matters.

Training a model is like teaching someone a new skill from scratch. You’re feeding massive amounts of information into the system, running calculations millions of times over, adjusting and refining until the AI “learns” what you want it to do. This process is incredibly energy-intensive. Training a large language model can consume 50 gigawatt-hours of energy—enough to power a major city for three days straight.

But here’s the twist: once that training is done, the real energy drain is just beginning.

Inference is what happens when you actually use the AI—when it answers your question, generates an image, or writes that email for you. Each individual task might seem small, but we’re now doing billions of these tasks every single day. In fact, experts estimate that 80–90% of computing power for AI is now spent on inference, not training. The AI energy resource demand from inference alone is reshaping data center design.

The type of task makes a huge difference too. A single ChatGPT query consumes 10 times the electricity of a Google search. Text generation is actually the most efficient AI task. Image generation requires significantly more power. And video generation? That’s where things get truly demanding—generating just a 5-second video can consume around 3.4 million joules of energy.

Think about using AI throughout a typical day: 15 text queries, 10 image generations, and 3 short videos. That single day of AI use consumes about 2.9 kilowatt-hours of electricity. Now multiply that by millions of users worldwide, and you start to see the scale of the challenge.
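As a rough check on those figures, the sketch below converts the 3.4 million joule video estimate into kilowatt-hours and compares three such videos with the 2.9 kWh daily total. It uses only the numbers quoted above. The function and variable names are illustrative, and because per-query figures for text and images are not given here, they appear only as the remainder.

```python
JOULES_PER_KWH = 3.6e6  # 1 kWh equals 3.6 million joules by definition


def joules_to_kwh(joules: float) -> float:
    """Convert an energy value in joules to kilowatt-hours."""
    return joules / JOULES_PER_KWH


VIDEO_JOULES = 3.4e6      # one 5-second video, as quoted in the article
VIDEOS_PER_DAY = 3        # from the example day of AI use
DAILY_TOTAL_KWH = 2.9     # quoted total for the example day

video_kwh = VIDEOS_PER_DAY * joules_to_kwh(VIDEO_JOULES)
video_share = video_kwh / DAILY_TOTAL_KWH
remainder_kwh = DAILY_TOTAL_KWH - video_kwh  # left for 15 text queries and 10 images

print(f"Three videos: {video_kwh:.2f} kWh ({video_share:.0%} of the daily total)")
print(f"Remaining for text and images: {remainder_kwh:.2f} kWh")
```

On these numbers, video generation accounts for nearly all of the day’s consumption, which is why the mix of tasks matters as much as the number of queries.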

A Staggering Scale: AI’s Consumption in Context

Let’s talk about what these numbers actually mean for our infrastructure.

In 2023, data centers consumed 4.4% of U.S. electricity. That’s already substantial, but what’s coming next is what keeps energy planners up at night. By 2028, that share could triple. AI alone could consume as much electricity annually as 22% of all U.S. households.

Here’s another way to think about it: data centers in the U.S. used somewhere around 200 terawatt-hours of electricity in 2024. That’s roughly what it takes to power Thailand for an entire year. Within those data centers, the AI-specific servers consumed between 53 and 76 terawatt-hours.

The projections for 2028 are even more striking. Power dedicated to AI-specific purposes in the U.S. could rise to between 165 and 326 terawatt-hours per year. We’re not talking about modest growth—this is exponential expansion happening in real time.
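To see what those ranges imply, here is a minimal sketch that turns the 2024 and 2028 figures into growth multiples and implied annual growth rates. Pairing the low estimates together and the high estimates together is an assumption made for illustration, as is treating the growth as a smooth compound rate over four years.

```python
def growth_multiple(start_twh: float, end_twh: float) -> float:
    """How many times larger the end value is than the start value."""
    return end_twh / start_twh


def implied_annual_growth(start_twh: float, end_twh: float, years: int) -> float:
    """Compound annual growth rate that links the two values."""
    return (end_twh / start_twh) ** (1 / years) - 1


YEARS = 2028 - 2024
# (2024 TWh, 2028 TWh) for AI-specific servers, as quoted in the article
scenarios = {"low": (53, 165), "high": (76, 326)}

results = {}
for label, (start, end) in scenarios.items():
    results[label] = (
        growth_multiple(start, end),
        implied_annual_growth(start, end, YEARS),
    )
    multiple, rate = results[label]
    print(f"{label}: {multiple:.1f}x over {YEARS} years, about {rate:.0%} per year")
```

Either pairing implies AI-specific demand growing by roughly a third or more every year, far faster than typical grid load growth.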

This rapid transformation of data infrastructure into a massive power challenge demands immediate attention and smart solutions. The question isn’t whether AI will continue growing; it’s whether our energy infrastructure can keep pace sustainably.

That’s where reliable, clean AI energy resource solutions become critical. The technology sector needs baseload power that’s available 24/7, not intermittent sources that fluctuate with weather conditions. This is exactly the kind of infrastructure challenge that modular hydropower was designed to solve.

More Than Just Electricity: The Hidden Water and Waste Footprint of AI

When we talk about AI’s environmental impact, electricity usually takes center stage. But here’s the thing: electricity is just the tip of the iceberg. Behind every AI query lies a complex infrastructure with a footprint that extends far beyond the power meter. We’re talking about massive water consumption, mountains of electronic waste, and the mining of rare earth minerals from some of the most fragile ecosystems on Earth.

These hidden costs matter tremendously when we’re planning sustainable AI energy resource infrastructure. They affect communities, ecosystems, and the long-term viability of AI itself. Let’s pull back the curtain and look at what’s really happening.

The Thirst for Data: AI’s Shocking Water Usage

Here’s something most people don’t realize: data centers are essentially giant heat generators that need constant cooling. And cooling at this scale? It requires an enormous amount of water.

The numbers tell a startling story. Google’s data centers used 20 percent more water in 2022 than in 2021. Microsoft saw an even steeper jump—their water consumption rose by 34 percent in the same period. These aren’t small increases. We’re talking about millions upon millions of gallons.

Looking ahead, the projections are even more concerning. According to research, AI infrastructure may soon consume six times more water than Denmark—a country of 6 million people. Think about that for a moment. The cooling systems for our AI tools could rival the entire water needs of a nation.

This creates real problems for real people. In communities across the country, data centers compete directly with local water needs. Agriculture suffers. Drinking water supplies get strained. Natural ecosystems that depend on consistent water levels face disruption. Take The Dalles, Oregon, as an example—a town that’s seen how data center water demands can strain local resources and spark community tension.

The location of data centers and the cooling technologies they use aren’t just technical decisions. They’re choices that ripple through entire communities and ecosystems. When we plan AI energy resource infrastructure, we need to think about water just as carefully as we think about watts.

From Mine to Landfill: The Hardware Lifecycle

The environmental story of AI starts long before any model gets trained. It begins in mines around the world, where rare earth minerals are extracted—often at tremendous environmental and social cost.

Here’s a sobering fact: making a 2 kg computer requires 800 kg of raw materials. That’s right—for every two kilograms of hardware sitting in a data center, 800 kilograms of earth had to be moved, processed, and refined. The ratio is almost unbelievable, but it reflects the complex manufacturing process and the diverse materials needed for modern computing equipment.

These materials include rare earth elements whose mining can devastate landscapes, contaminate water supplies, and create serious human rights concerns in mining communities. The extraction process itself is energy-intensive and often involves hazardous chemicals.

But the environmental burden doesn’t end once the hardware is built. AI technology advances so rapidly that servers and processors become obsolete quickly. This creates a growing mountain of electronic waste—or e-waste—that contains mercury, lead, cadmium, and other hazardous substances. When not properly recycled, these toxins leach into soil and groundwater, creating long-term environmental hazards.

The full lifecycle impact of AI hardware, from extraction and manufacturing through years of energy-hungry operation to eventual disposal, represents a substantial environmental burden. The growing e-waste crisis shows just how serious this challenge has become.

When we develop comprehensive AI energy resource strategies, we can’t focus solely on operational electricity. We need to account for the carbon emissions from manufacturing, the water consumed in production and cooling, and the waste generated at every stage. Only by seeing the complete picture can we build truly sustainable AI infrastructure.

The Transparency Void: Why We Can’t Accurately Measure AI’s Impact

Here’s the uncomfortable truth: we don’t really know how much energy AI is using. Not precisely, anyway. And that’s a serious problem when we’re trying to plan for the future of our AI energy resource infrastructure.

It’s like trying to budget for a household where half the family refuses to tell you what they’re spending. You can see the credit card bill climbing, but you can’t pinpoint where the money’s actually going.

The Problem with “Hearsay” Numbers

Most technology companies treat their energy usage data like a closely guarded secret. They’ll cite competitive concerns or proprietary information, but the result is the same: researchers and infrastructure planners are left in the dark. This forces scientists to reverse-engineer estimates based on limited information, leading to what one group of researchers aptly called “hearsay numbers.” A 2022 paper highlights how difficult it is for data scientists to access measurements of greenhouse gas (GHG) impact.

Even when we can analyze open-source models, measuring the full power draw is remarkably complex. We might know how much energy the specialized processors consume, but what about the central processing units? The cooling systems? The memory modules? All the auxiliary equipment that keeps these systems running?

Research from Microsoft suggests that doubling the energy consumed by the graphics processors gives a rough approximation of total power draw for inference tasks. But it remains only that: a rough approximation, not a precise measurement.
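The doubling heuristic can be written down in a few lines. This is a sketch of the rule of thumb described above, not a measurement method; the function name, the `overhead_factor` parameter, and the 1.5 Wh sample reading are all hypothetical.

```python
def estimate_total_energy_wh(gpu_energy_wh: float, overhead_factor: float = 2.0) -> float:
    """Approximate whole-system energy from measured GPU energy.

    The default factor of 2.0 reflects the rough doubling rule for inference;
    CPUs, memory, cooling, and other auxiliary loads are folded into it.
    """
    if gpu_energy_wh < 0:
        raise ValueError("gpu_energy_wh must be non-negative")
    return gpu_energy_wh * overhead_factor


# Hypothetical GPU-only reading for a batch of inference requests
sample_gpu_wh = 1.5
estimated_total_wh = estimate_total_energy_wh(sample_gpu_wh)
print(f"GPU only: {sample_gpu_wh} Wh, estimated total: {estimated_total_wh} Wh")
```

Keeping the factor as a parameter makes it easy to substitute a measured value if a facility ever publishes one.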

Initiatives like the AI Energy Score are trying to rate models on energy efficiency, which is a step in the right direction. But their effectiveness is severely limited when closed-source companies simply choose not to participate. Without mandatory reporting standards, these voluntary efforts can only accomplish so much.

The Need for Greater Transparency

The lack of disclosure creates real challenges for anyone trying to plan reliable AI energy resource infrastructure. Most companies running data centers won’t reveal what percentage of their energy actually goes toward processing AI workloads. Google stands out as a rare exception, acknowledging that machine learning accounts for somewhat less than 15 percent of its data centers’ energy use. But this level of openness is the exception, not the rule.

The situation gets even murkier when we consider carbon intensity. A data center powered by renewable hydropower has a vastly different environmental impact than one running on coal-fired electricity. Yet without transparency about the energy mix powering these facilities, we can’t accurately assess the carbon emissions associated with AI.

Researchers have found that the carbon intensity of electricity used by data centers was 48% higher than the U.S. average. That’s a significant difference, and it matters enormously for environmental planning. Data centers tend to locate in regions with abundant, cheaper power, but that power frequently comes from grids heavily reliant on fossil fuels.

This is why we urgently need standardized reporting mechanisms. Not voluntary guidelines that companies can ignore, but mandated transparency requirements that give infrastructure planners, policymakers, and the public the information they need to make informed decisions about our energy future. Without this transparency, we’re essentially building critical infrastructure in the dark, hoping our estimates are close enough. That’s not a strategy—it’s a gamble.
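To make the 48% carbon-intensity gap concrete, the sketch below compares emissions for the same electricity use on an average grid and on the grid mix data centers actually draw from. The average intensity of 400 kg of CO2 per MWh and the 1,000 MWh load are placeholders chosen for easy arithmetic, not sourced figures; only the 48% premium comes from the article.

```python
DATA_CENTER_INTENSITY_PREMIUM = 0.48  # 48% above the U.S. average, as quoted


def emissions_tonnes(energy_mwh: float, intensity_kg_per_mwh: float) -> float:
    """Emissions in metric tonnes of CO2 for a given energy use and grid intensity."""
    return energy_mwh * intensity_kg_per_mwh / 1000


# Placeholder inputs for illustration only (not sourced figures)
ASSUMED_US_AVG_KG_PER_MWH = 400.0
ASSUMED_LOAD_MWH = 1_000.0

avg_grid_t = emissions_tonnes(ASSUMED_LOAD_MWH, ASSUMED_US_AVG_KG_PER_MWH)
data_center_t = emissions_tonnes(
    ASSUMED_LOAD_MWH,
    ASSUMED_US_AVG_KG_PER_MWH * (1 + DATA_CENTER_INTENSITY_PREMIUM),
)

print(f"Average grid: {avg_grid_t:.0f} t CO2")
print(f"Data center grid mix: {data_center_t:.0f} t CO2")
```

Whatever the true average is, the ratio between the two results stays at 1.48, which is exactly the kind of calculation that disclosed energy-mix data would let planners run with real inputs.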

Taming the Beast: Can We Make AI Energy Use Sustainable?

The energy challenge facing AI is massive, but it’s not insurmountable. I’ve spent decades working on infrastructure projects, and I’ve learned that the biggest challenges often have practical, achievable solutions. The key is approaching them strategically, with the right tools and technologies.

Making AI sustainable requires us to think bigger than just plugging data centers into cleaner power sources. It demands a fundamental shift in how we design, power, and operate the infrastructure that makes AI possible.

Efficiency First: Smarter Algorithms, Hardware, and Data Centers

The path to sustainable AI runs through multiple interconnected strategies. Researchers and engineers are developing more efficient algorithms that can perform the same tasks while consuming less energy. This means smarter code that doesn’t waste computing power on unnecessary calculations. At the same time, we’re seeing innovations in hardware design that allow processors to do more work per watt of electricity consumed.

Data center design itself is evolving rapidly. Modern facilities are implementing advanced cooling technologies that reduce or eliminate water consumption, optimizing how servers are used to minimize idle energy waste, and even capturing waste heat for other productive uses. These improvements can dramatically reduce the environmental footprint of AI operations.

Interestingly, AI can also be part of the solution to our broader energy challenges. When properly deployed, AI can optimize power grids, predict energy demand with remarkable accuracy, and manage distributed energy resources more effectively than traditional systems. AI is being used to map destructive dredging and even to help reduce contrails from aircraft. The technology that’s creating our energy challenge can also help us solve it.

But here’s where we need to be careful. There’s a phenomenon called the Jevons paradox: when technology becomes more efficient, we often end up using more of it, not less. If AI becomes incredibly efficient and cheap to run, companies might simply deploy it more widely, potentially canceling out the efficiency gains. This means efficiency alone isn’t enough—we need sustainable energy sources powering these systems from the start.
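The Jevons paradox comes down to simple multiplication: total energy is the number of tasks times the energy per task. The sketch below uses made-up numbers (a 50% efficiency gain and a tripling of usage) purely to illustrate the effect; none of the values are forecasts.

```python
def total_energy(tasks: float, energy_per_task: float) -> float:
    """Total energy is task volume times per-task energy."""
    return tasks * energy_per_task


def breakeven_usage_multiple(energy_reduction: float) -> float:
    """Usage growth at which an efficiency gain is fully cancelled out."""
    if not 0 <= energy_reduction < 1:
        raise ValueError("energy_reduction must be in [0, 1)")
    return 1 / (1 - energy_reduction)


# Hypothetical scenario in arbitrary energy units
baseline = total_energy(tasks=1_000_000, energy_per_task=1.0)
after = total_energy(tasks=3_000_000, energy_per_task=0.5)  # half the energy, triple the use
change = after / baseline - 1

print(f"Net change in total energy: {change:+.0%}")
print(f"Break-even usage growth: {breakeven_usage_multiple(0.5):.1f}x")
```

In this scenario each task uses half the energy, yet total consumption still rises because usage grows past the break-even multiple.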

The Renewable Revolution: Powering the Future AI Energy Resource

Here’s the fundamental challenge: data centers need power 24 hours a day, 7 days a week, 365 days a year. There are no breaks, no downtime, no “we’ll wait until the sun comes out” periods. This creates a unique problem for renewable energy planning.

Solar panels don’t generate electricity at night. Wind turbines sit idle when the air is still. These are excellent technologies, but they can’t single-handedly meet the constant, unwavering power demands of AI infrastructure. What we need is stable, baseload renewable energy that can deliver consistent power around the clock.

This is where hydropower becomes absolutely critical. Unlike intermittent renewables, hydropower provides continuous, dispatchable power that can respond instantly to changing grid demands. It’s the kind of reliable, clean energy that AI energy resource planning desperately needs.

At FDE Hydro, we’ve developed microgrid solutions using our modular French Dam technology that can deliver this exact kind of stable, renewable power. Our precast concrete systems dramatically reduce the cost and construction time for hydroelectric facilities, making clean baseload power more accessible exactly when we need it most.

Think of hydropower as the anchor that allows other renewables to flourish. When solar and wind are producing, great—use that power. But when they’re not, hydropower fills the gap, ensuring the lights stay on and the servers keep running. This isn’t just theory; it’s why we call hydropower the guardian of the grid. For more on this concept, check out our article on 4 Reasons Why Hydropower is the Guardian of the Grid.
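The gap-filling role described above can be sketched as a simple dispatch rule: use whatever solar and wind are producing, then call on hydropower for the rest. The hourly values, the 100 MW constant load, and the 90 MW hydro capacity are hypothetical, and a real dispatch model would also account for reservoir levels, ramp rates, and transmission limits.

```python
def hydro_dispatch(demand_mw, solar_mw, wind_mw, hydro_capacity_mw):
    """Return (hydro output, unmet demand) in MW for each period.

    Solar and wind are used first; hydro covers the remaining gap
    up to its capacity.
    """
    schedule = []
    for solar, wind in zip(solar_mw, wind_mw):
        gap = max(0.0, demand_mw - (solar + wind))
        hydro = min(gap, hydro_capacity_mw)
        schedule.append((hydro, gap - hydro))
    return schedule


# Hypothetical four-period profile for a data center with a constant load
DEMAND_MW = 100
solar = [0, 40, 80, 10]
wind = [30, 20, 50, 0]

schedule = hydro_dispatch(DEMAND_MW, solar, wind, hydro_capacity_mw=90)
for period, (hydro, unmet) in enumerate(schedule):
    print(f"Period {period}: hydro {hydro:.0f} MW, unmet {unmet:.0f} MW")
```

In this toy profile hydro output swings between zero and 90 MW while the load never moves, which is the anchoring behavior the paragraph describes.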

As AI continues to expand, the companies and regions that will succeed are those that plan now for reliable, clean energy infrastructure. The technology exists. The question is whether we’ll deploy it quickly enough to meet the coming demand without compromising our environmental commitments.

Regulation and Rate Hikes: The Coming Reckoning for AI’s Growth

For years, AI development has operated in something of a wild west—innovate fast, worry about the consequences later. But that era is ending. Policymakers around the world are waking up to the reality that AI’s explosive growth comes with serious environmental and economic trade-offs. The question isn’t whether regulation is coming; it’s what form it will take and how quickly it will reshape the AI energy resource landscape.

The Dawn of Regulation

Europe is leading the charge. The EU has approved legislation to temper AI’s environmental impact, marking one of the first comprehensive attempts to hold AI development accountable for its environmental footprint. This isn’t just symbolic—it’s a blueprint that other regions are watching closely.

Here in the United States, we’re seeing similar momentum. The Artificial Intelligence Environmental Impacts Act, introduced in Congress in 2024, would require federal agencies to assess and publicly report on AI’s energy consumption and environmental effects. It’s an important first step toward transparency in an industry that has historically guarded its energy data like state secrets.

Meanwhile, international standards organizations aren’t sitting idle. The International Organization for Standardization (ISO) is developing criteria for what they’re calling “sustainable AI.” These standards will establish benchmarks for measuring energy efficiency, raw material consumption, and water usage across the AI lifecycle. While some of these initiatives start as non-binding recommendations, they create frameworks that often become industry expectations—and eventually, requirements.

The regulatory landscape is shifting from voluntary best practices to mandatory accountability. Companies that plan ahead for these changes will have a significant advantage over those caught flat-footed.

Who Foots the Bill?

Here’s where things get personal for everyday consumers. The billions being invested in AI infrastructure don’t exist in a vacuum. Someone has to pay for the massive expansion of our electricity grid, and that someone might be you.

Utility companies are negotiating major agreements with technology companies to deliver the staggering amounts of power their data centers demand. But building new generation capacity and upgrading transmission infrastructure costs money—a lot of it. Those costs typically get passed along to ratepayers. A Virginia report estimates potential monthly cost increases for residents as data centers continue their rapid expansion in the state. Virginia isn’t unique; any region experiencing data center growth faces similar pressures.

The financial impact goes beyond your monthly electric bill, though. We need to consider what researchers call “higher-order effects”—the ripple consequences of widespread AI deployment. Take autonomous vehicles, for instance. While self-driving cars might be more efficient individually, some experts worry they could make driving so convenient that people drive more overall, actually increasing total emissions. It’s the kind of unintended consequence that’s hard to predict but important to consider.

There’s also the troubling potential for AI-generated misinformation to undermine climate action. When AI systems can produce convincing but false information about environmental challenges, it becomes harder to build the public consensus needed for meaningful change. These broader societal impacts don’t show up on energy meters, but they’re part of the true cost of our AI future.

The reality is that AI’s growth trajectory is bumping up against hard limits, both environmental and economic. How we navigate this tension between innovation and sustainability will define not just the future of AI, but the future of our energy infrastructure and the costs we all bear for it.

Conclusion: Powering the Future of Intelligence, Responsibly

We stand at a crossroads. AI isn’t just another technology trend—it’s a fundamental shift in how we live, work, and solve problems. But here’s the thing: this revolution runs on electricity, water, and raw materials at a scale most of us never imagined.

The numbers we’ve explored aren’t just statistics. They represent real decisions happening right now about our energy future. Every data center that breaks ground, every AI model that gets deployed, every query that gets processed—these are shaping decades of infrastructure investment and environmental impact.

But here’s what gives me hope after five decades in infrastructure: AI’s energy and resource challenges aren’t insurmountable. We’ve faced big infrastructure problems before, and we’ve solved them. The difference this time is urgency. We don’t have the luxury of waiting decades to get this right.

The solution isn’t to slow down AI development. That ship has sailed, and frankly, we need AI’s capabilities to tackle climate change, optimize resource use, and solve problems we haven’t even identified yet. The real challenge is making sure we power this revolution responsibly.

This means demanding transparency from tech companies about their energy and water usage. It means investing in efficiency at every level—smarter algorithms, better hardware, more efficient data centers. And critically, it means choosing the right energy sources to power this future.

This is where stable, clean, baseload power becomes non-negotiable. Solar and wind are important pieces of the puzzle, but data centers need power that flows 24/7, regardless of weather or time of day. They need the kind of reliable, dispatchable energy that hydropower delivers.

At FDE Hydro, we’ve spent years developing modular precast concrete technology that makes hydroelectric infrastructure faster and more affordable to build. We’re not just selling equipment—we’re providing a crucial piece of the AI energy resource puzzle. Our “French Dam” technology offers exactly the kind of stable, clean power that AI infrastructure demands.

The future of AI and the future of energy aren’t separate conversations. They’re the same conversation. As we push the boundaries of what machines can do, we need to be equally innovative about how we power them. The Biggest Untapped Solution to Climate Change is in the Water, and it’s ready to help us build a smarter, more sustainable world.

The choices we make today about AI’s energy infrastructure will echo for generations. Let’s make sure we get them right.

Learn more about the power of Hydropower.